This article is part of the ever-growing catalog of publications on Artificial Intelligence (AI).

Glossary

- MCP: Model Context Protocol

- LLM: Large Language Model

- API: Application Programming Interface

- URI: Uniform Resource Identifier

What is MCP?

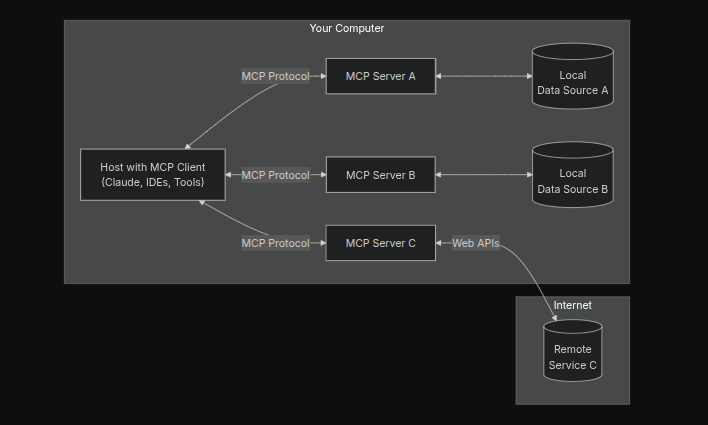

The Model Context Protocol (MCP) is an open standard, developed by Anthropic, designed to connect AI assistants, especially LLMs, to the systems where data lives, such as content repositories, business tools, and development environments. Its main goal is to standardize how applications provide context to LLMs, something that previously required custom integrations such as retrieval-augmented generation (RAG) pipelines. MCP's core innovation is a universal, structured way for AI models to access and interact with external data, replacing fragmented, custom integrations with a single, scalable protocol.

The need

With a green field of innovation open for AI- and LLM-powered applications, developers started integrating their applications with LLMs manually, often through remote API calls to services such as OpenAI's GPT models and Anthropic's Claude. As applications grew in complexity, so did the requirements and footprint of their LLM integrations, creating an environment in which every LLM required its own specific parameters for configuration, access, and integration.

MCP emerged in this context as an attempt to standardize how applications interface with LLMs. In very simplified terms, it gives LLMs something like a REST API contract: integration-specific requirements are abstracted behind a standard format, so integrations become more alike than different. Strictly speaking, MCP messages are exchanged as JSON-RPC 2.0 rather than REST.
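Concretely, that contract is a small set of JSON-RPC 2.0 messages. The sketch below builds three of them in Python: the `initialize` handshake, a `tools/list` discovery request, and a `tools/call` invocation. The method names and top-level fields follow the MCP specification; the helper `make_request`, the client name, the `2024-11-05` protocol version picked here, and the `search_issues` tool are illustrative assumptions, and the transport (stdio or HTTP) is left out entirely.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

# JSON-RPC 2.0 pairs each response with its request through the "id" field,
# so this sketch simply hands out increasing ids.
_ids = count(1)


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object of the kind MCP clients send."""
    request: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Handshake: the client states the protocol version it speaks, its capabilities
# and who it is. The version string and client name here are illustrative.
initialize = make_request(
    "initialize",
    {
        "protocolVersion": "2024-11-05",
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},
    },
)

# Discovery: the same envelope asks any server which tools it exposes.
list_tools = make_request("tools/list")

# Invocation: "search_issues" and its arguments are a hypothetical tool.
call_tool = make_request(
    "tools/call",
    {"name": "search_issues", "arguments": {"query": "bug", "limit": 5}},
)

for message in (initialize, list_tools, call_tool):
    print(json.dumps(message))
```

Because every server speaks the same envelope, a client written this way needs no per-integration request format; only the method parameters change. A real client would also send the `notifications/initialized` notification after the handshake, which this sketch omits.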

Key Features

Anthropic's MCP specification defines principles such as Data Privacy and Tool Safety in detail. Building on those principles, the key features of the protocol are:

- Contextual Data Access: MCP lets AI models securely access a wide range of data, from files and databases to live system feeds and APIs, all identified by URIs;

- Semantic Interoperability: It preserves the meaning and relationships within data, not just the raw information;

- Real-Time Sync: Supports dynamic, real-time updates between models and data sources;

- Cross-Domain Compatibility: Works across industries and tech stacks;

- Security & User Control: Emphasizes user consent and fine-grained control over what data is shared and how it’s used.
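The first and last features meet in practice: resources are addressed by URI and fetched with a `resources/read` request, while enforcing what may be fetched is left to the application hosting the client, since the protocol itself cannot enforce consent. The sketch below assumes a hypothetical host-side allowlist (`is_approved`, `approved_locations`) that the user has approved; it decodes percent-escapes and collapses `..` segments before comparing, so a path cannot escape an approved location. It illustrates fine-grained control under those assumptions; it is not a hardened security layer and not part of the protocol.

```python
import json
import posixpath
from typing import List, Tuple
from urllib.parse import unquote, urlparse


def split_uri(uri: str) -> Tuple[str, str, str]:
    """Split a URI into (scheme, authority, path), decoding %-escapes and collapsing '..'."""
    parts = urlparse(uri)
    path = posixpath.normpath(unquote(parts.path)) if parts.path else "/"
    return parts.scheme, parts.netloc, path


def is_approved(uri: str, approved: List[str]) -> bool:
    """Hypothetical host policy: a URI may be read only if it lies under an approved location."""
    scheme, netloc, path = split_uri(uri)
    for location in approved:
        loc_scheme, loc_netloc, loc_path = split_uri(location)
        if (scheme, netloc) != (loc_scheme, loc_netloc):
            continue  # different scheme or host: never covered by this approval
        # Require an exact match or a "/" boundary, so "project-secrets" != "project".
        if path == loc_path or path.startswith(loc_path.rstrip("/") + "/"):
            return True
    return False


def read_resource_request(request_id: int, uri: str, approved: List[str]) -> dict:
    """Build an MCP `resources/read` request, refusing URIs the user has not approved."""
    if not is_approved(uri, approved):
        raise PermissionError(f"reading {uri} was not approved by the user")
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "resources/read",
        "params": {"uri": uri},
    }


# Hypothetical: the user approved read access to a single project folder.
approved_locations = ["file:///home/user/project"]

print(json.dumps(read_resource_request(1, "file:///home/user/project/README.md", approved_locations)))
try:
    read_resource_request(2, "file:///home/user/project/../.ssh/id_rsa", approved_locations)
except PermissionError as err:
    print("blocked:", err)
```

A sibling path such as `file:///home/user/project-secrets/key` is rejected as well, because the comparison requires a `/` boundary after the approved path.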

When to use MCP

MCP is best suited for scenarios where:

- AI models need secure, flexible, and real-time access to diverse external data sources;

- Interoperability and scalability are required across multiple systems or domains;

- There’s a need to preserve data context and semantic relationships, not just transfer raw data.

Cons

Like any other technology, MCP should be adopted based on the requirements and goals at hand. Not every application has clearly defined AI needs, and LLMs are not a silver bullet that automatically makes a program better. Developer tools can certainly take advantage of them for a variety of use cases, as GitHub Copilot, marketed as "your AI pair programmer", shows, but not all applications need AI to deliver value to their users.

When evaluating whether MCP is needed, take the following aspects into account:

- Implementation Overhead: Adopting MCP may require significant initial setup, especially for legacy systems.

- Evolving Standard: As MCP is a relatively new protocol, its documentation and tooling are still maturing, which may affect early adopters.

- Security Risks: The broad access MCP enables also increases the need for robust security and user controls. A recent example is GitHub's MCP server, which was exploited to access data in private repositories.

- Resource Management: Server authors must handle various resource selection patterns, which adds complexity. The models themselves are also very resource-intensive, with high power and processing demands. Training your own model is even more expensive: Elon Musk, for instance, reportedly spent roughly $10 billion on AI training hardware in 2024, and that figure covers hardware alone, not the energy needed to keep it running or the personnel needed to maintain such infrastructure.
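One concrete form those resource selection patterns take is resource templates: a server can advertise parameterized URIs (the specification uses RFC 6570 URI templates) and must map each incoming URI back to a handler. The sketch below supports only the simplest `{name}` expressions; the templates and handler names are hypothetical, and a real server would more likely rely on an SDK's template support than on this hand-rolled matcher.

```python
import re
from typing import Dict, List, Optional, Tuple


def compile_template(template: str) -> "re.Pattern[str]":
    """Compile a simple URI template such as 'users://{user_id}/profile' into a regex.

    Only bare {name} expressions are supported (the simplest RFC 6570 form); each one
    matches a single path segment, i.e. one or more characters other than '/'.
    """
    pattern, pos = "", 0
    for m in re.finditer(r"\{([A-Za-z_]\w*)\}", template):
        pattern += re.escape(template[pos:m.start()])  # literal text before the variable
        pattern += f"(?P<{m.group(1)}>[^/]+)"          # named group for the variable
        pos = m.end()
    pattern += re.escape(template[pos:])                # trailing literal text
    return re.compile(pattern)


# Hypothetical templates a server might advertise, each mapped to a handler name.
# Order matters: the first matching template wins when several could apply.
TEMPLATES: List[Tuple[str, str]] = [
    ("users://{user_id}/profile", "read_profile"),
    ("repo://{owner}/{name}/readme", "read_readme"),
]
COMPILED = [(compile_template(t), handler) for t, handler in TEMPLATES]


def resolve(uri: str) -> Optional[Tuple[str, Dict[str, str]]]:
    """Return (handler, extracted parameters) for the first template that matches uri."""
    for regex, handler in COMPILED:
        match = regex.fullmatch(uri)
        if match:
            return handler, match.groupdict()
    return None


print(resolve("users://42/profile"))       # ('read_profile', {'user_id': '42'})
print(resolve("repo://octo/demo/readme"))  # ('read_readme', {'owner': 'octo', 'name': 'demo'})
print(resolve("users://42/settings"))      # None
```

Even this toy version forces decisions (segment boundaries, match order, unmatched URIs) that every server author has to make deliberately.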

Final Considerations

MCP is rapidly becoming a cornerstone for modern AI applications, enabling LLMs and other AI systems to break free from data silos and deliver richer, more actionable insights. It’s especially powerful in environments where context, interoperability, and real-time data are critical. However, careful planning around security, resource management, and implementation is essential to realize its full benefits.

See ya! 👋